For a lot of people (me included), one of the main reasons for losing motivation to learn something new is an unanswered question: why do I need this?

Since matrices are at the core of neural networks and deep learning, I will try to explain in a short text why they are useful.

Why do we need symbols for numbers?

Instead of using the symbols 1, 2, 3, and so on, we could count things using dots or any other repeating pattern, like 1 = •, 2 = ••, 3 = •••, etc. It's obvious why this is not a good idea: once we are counting things in the hundreds or thousands, it would no longer be practical.

Why do we need function notation?

We can understand functions roughly as rules that take one or more values and return another one, like the function x², which squares every value it takes. Functions are often denoted by f(x), so why do we use this notation instead of the rule itself? There are several reasons, but one of them is similar to why we use symbols to represent numbers instead of repeating patterns: the rule can be so big that writing it out and dealing with the giant thing every time would be time-consuming and impractical.

Finally, why do we need matrices?

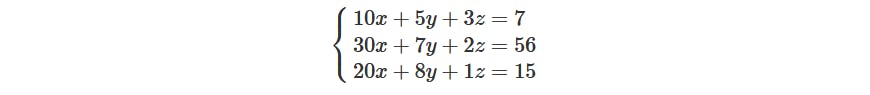

Working with matrices is a compact and practical way to deal with a lot of numbers at the same time. Suppose we have a system of equations:

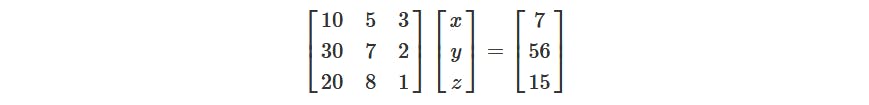

We can see that it has a repeating pattern: in every equation, we have values multiplied by x, y and z. With matrices, we can write this system without repeating those variables, in a much more elegant way.
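As a generic sketch of that matrix form, assuming a system of three equations (the coefficients a₁₁ … a₃₃ and the right-hand sides b₁, b₂, b₃ are placeholder symbols, not the values from the system above), the whole system fits in one line:

```latex
\begin{bmatrix}
a_{11} & a_{12} & a_{13} \\
a_{21} & a_{22} & a_{23} \\
a_{31} & a_{32} & a_{33}
\end{bmatrix}
\begin{bmatrix} x \\ y \\ z \end{bmatrix}
=
\begin{bmatrix} b_1 \\ b_2 \\ b_3 \end{bmatrix}
```

Each row of the first matrix holds the coefficients of one equation, and the matching entry of the last matrix holds that equation's right-hand side.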

With this, adding one more equation to the system is just a matter of adding numbers to the first and the last matrix, without touching the [x y z] matrix, since the variables stay the same. (Those who have already done endless exercise lists of equation systems know how boring it is to keep writing x, y, z over and over and over, hehe.)
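To make this concrete, here is a minimal sketch in plain Python. The coefficients, the helper names `mat_vec` and `satisfies`, and the candidate solution are all made up for illustration; they are not the values from the system above.

```python
# Hypothetical system written as A · [x, y, z] = b (values invented for illustration).
A = [
    [2, 1, -1],   # 2x +  y -  z = 1
    [1, 3, 2],    #  x + 3y + 2z = 13
    [1, 0, 1],    #  x      +  z = 4
]
b = [1, 13, 4]


def mat_vec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector (a list of numbers)."""
    return [sum(a * v for a, v in zip(row, vector)) for row in matrix]


def satisfies(matrix, rhs, candidate):
    """Check whether the candidate values for [x, y, z] satisfy every equation."""
    return mat_vec(matrix, candidate) == rhs


solution = [1, 2, 3]  # x = 1, y = 2, z = 3
holds_before = satisfies(A, b, solution)

# Adding one more equation (x + y + z = 6) only means appending numbers
# to the first and the last matrix; the [x, y, z] part is never touched.
A.append([1, 1, 1])
b.append(6)
holds_after = satisfies(A, b, solution)
```

Notice that x, y and z never appear in the data itself: the system is described entirely by the numbers in `A` and `b`.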

Neural networks often involve a huge number of values and operations, and writing down every one of them would be impractical, so we need a way to make things as compact as possible. Matrices help us with that.
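As a rough sketch of what this compactness looks like, one layer of a small network can be written as a single matrix of weights multiplied by the input values, plus a bias per output. This is a simplified illustration: the `layer` function and all the numbers are hypothetical, and the activation function a real network would apply afterwards is left out.

```python
def layer(weights, inputs, biases):
    """Compute weights · inputs + biases, giving one output per row of weights."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + bias
        for row, bias in zip(weights, biases)
    ]


# Three input values feeding two outputs: 2 x 3 = 6 weights in one matrix.
inputs = [1.0, 2.0, 3.0]
weights = [
    [0.5, -1.0, 2.0],   # weights for the first output
    [1.0, 0.0, -0.5],   # weights for the second output
]
biases = [0.5, 1.0]

outputs = layer(weights, inputs, biases)
print(outputs)  # [5.0, 0.5]
```

The same `layer` function works unchanged whether the weight matrix has six entries or six million, which is exactly why the matrix form is so convenient.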

Other reasons

Of course, these are not the only reasons to use function notation and matrices:

- With these we can write theorems and equations that generalize to any rule or any quantity of values.

- Another nice reason is that matrices are CPU/GPU friendly; computers take advantage of matrices to speed up the processing of expressions written with them.

A great resource for learning about matrices and linear algebra is the video series from the YouTube channel 3Blue1Brown.

If you have questions or comments, please leave them below!